Governing AI at Scale: Turning Repeatability into Resilience

How governance keeps AI systems trustworthy and how trust drives adoption and ROI | Edition 15

The AI Scaling Framework: Post 4 of 4 | The GenAI Divide

A playbook for selecting, scaling, and sustaining AI in the enterprise

The Economics of AI Risk: Why Foundations Matter More Than Speed

Closing the GenAI Divide: Execution Discipline For Scaling AI Pilots

Governing AI at Scale: Why Sustained Value Demands More Than Deployment (this post)

The Short Version

Governance surfaces risks early and enforces readiness, acting as operational intelligence that prevents costly failures.

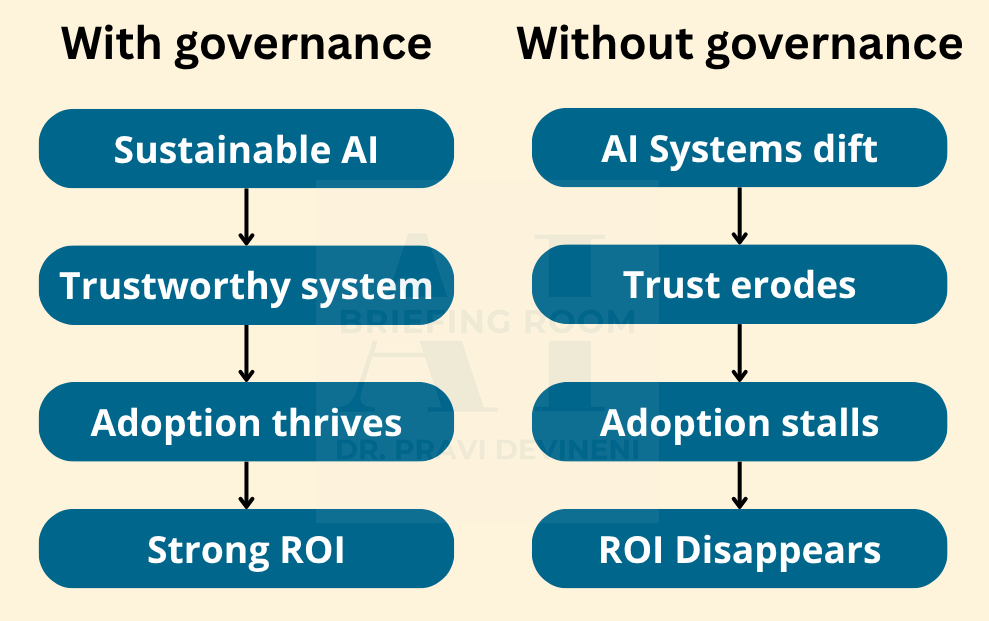

Trustworthy AI drives adoption and ROI. Systems that stay reliable earn user trust, scale usage, and deliver sustained returns.

Governance runs through the full lifecycle — from selection gates to execution enforcement to post-deployment resilience.

Without governance, even well-executed systems decay. Drift goes unnoticed, ownership evaporates, and trust erodes.

Why Governance Matters (and Why AI Keeps Failing Without It)

Most enterprises have learned how to build AI. A few have learned how to scale it. Almost none have learned how to make it operationally durable.

That’s where governance comes in, not as policy or policing, but as the operating discipline that keeps AI systems healthy once the excitement fades. Governance doesn’t slow teams down; it keeps them standing.

Most AI systems don’t fail because they’re technically wrong. They fail because users stop trusting them.

A model drifts, performance degrades, and no one notices for months. Users route around it, adoption collapses, and ROI evaporates.

The executive team sees: “We invested $2 million in AI and got 30 percent adoption.”

What they don’t see: “Governance gaps let the system decay until users lost confidence.”

That’s the trust gap, and it’s where most AI business cases die.

Governance converts momentum into sustainability — the discipline that keeps AI systems accurate, trusted, and supportable as they scale.

What Governance Actually Is (And Isn’t)

When executives hear “AI governance,” they imagine compliance checklists, audit trails, and policy documents that slow everything down.

That’s not governance. That’s documentation.

Real AI governance does three things:

Surfaces risks early — before they become incidents, trust issues, or regulatory failures.

Builds organizational preparedness — so when risks materialize, the organization already knows what to do.

Keeps AI aligned with values and strategy — ensuring decisions remain fair, explainable, and defensible as systems evolve.

Together, these functions make AI systems operationally durable — systems that stay reliable as data shifts, teams change, and policies evolve. Governance doesn’t just preserve models; it sustains the conditions that keep them working.

Sustainable AI is AI that remains accurate, safe, compliant, and economically viable under real-world change. When any part of that ecosystem degrades, the system fails. Governance keeps the ecosystem healthy — and the system alive.

What Governance Produces

When governance works, it produces concrete capabilities that let organizations operate AI safely at scale. For example:

Value Alignment Mechanisms: Continuous fairness, explainability, and privacy checks that evolve as policies and norms change.

Audit Trails: Immutable records of inputs, outputs, and decisions so “what happened and why” can be proven, not guessed.

Incident Response Plans: Rehearsed playbooks for technical, security, or bias incidents, with clear owners and escalation paths.

Security and Safety by Design: Risks surfaced and mitigated from day one: input validation, access controls, model isolation, and threat modeling.
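Audit trails are the most mechanical of these capabilities. One common technique for making a log tamper-evident is hash chaining: each record stores a hash of the one before it, so quietly changing a past entry breaks the chain. The sketch below is illustrative only; `AuditLog` and its fields are hypothetical, and a production system would also write entries to append-only storage rather than hold them in memory.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical hash-chained audit log, held in memory for illustration."""
    entries: list = field(default_factory=list)

    def append(self, inputs: dict, output: str, decided_by: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"inputs": inputs, "output": output, "decided_by": decided_by, "prev": prev}
        record["hash"] = _digest(record)  # hash is computed before the key is added
        self.entries.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute every hash; a changed field or a broken link returns False."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"asset_id": "pump-7", "vibration_rms": 4.2}, "schedule_inspection", "model")
log.append({"asset_id": "pump-9", "vibration_rms": 1.1}, "no_action", "model")
print(log.verify())                     # True
log.entries[0]["output"] = "no_action"  # rewrite history
print(log.verify())                     # False
```

This is what lets "what happened and why" be proven: verification is a recomputation anyone can run, not a matter of trusting whoever holds the log.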

Example:

A manufacturing plant deployed an AI-based predictive-maintenance model to detect equipment faults early. After a routine sensor calibration, the model began missing early warning signals.

Without governance: Months of unnoticed drift led to unplanned downtime, costly repairs, and declining user trust.

With governance: Continuous monitoring caught the drift within 48 hours. Retraining was triggered through a pre-approved path, and a rollback was completed before any operational impact. No downtime. No erosion of confidence.

Governance didn’t slow them down; it kept them operationally stable.
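Catching drift within 48 hours implies an automated check that compares recent inputs against a reference window. The example doesn't say which metric the plant used; the sketch below assumes the Population Stability Index (PSI) on a single sensor feature, with an illustrative 0.2 alert threshold. The function names and the readings are hypothetical.

```python
import math

PSI_ALERT = 0.2  # illustrative alert threshold; tune per feature and model


def psi(reference: list[float], recent: list[float], bins: int = 10) -> float:
    """Population Stability Index between a reference window and a recent window."""
    lo = min(min(reference), min(recent))
    hi = max(max(reference), max(recent))
    width = (hi - lo) / bins or 1.0  # guard against a zero-width range

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # Floor empty bins so the log term below stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(recent)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_alert(reference: list[float], recent: list[float]) -> tuple[float, bool]:
    score = psi(reference, recent)
    return score, score > PSI_ALERT


# Hypothetical sensor readings before and after a calibration that shifts values by +3.
before = [20.0 + 0.1 * i for i in range(100)]
after = [v + 3.0 for v in before]
print(drift_alert(before, before))  # (0.0, False)
score, alert = drift_alert(before, after)
print(alert)  # True
```

Run on a schedule for each monitored feature, a check like this turns "no one notices for months" into an alert routed to the model's owner.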

The AI Governance Stack: From System to Board

Effective governance isn’t a committee; it’s a stack of accountability that scales from individual systems to the board.

System Level: Product & ML leads govern model quality, bias, and explainability.

Operational Level: Ops & risk teams govern data access, retraining, and security.

Portfolio Level: AI PMO or data office governs dependencies and maturity.

Enterprise / Board Level: CAIO and risk committee govern policy and strategic alignment.

Each layer produces evidence the next consumes: system logs feed operations metrics, operations roll up to portfolio dashboards, portfolios inform board oversight.

When evidence flows this way, governance becomes an information supply chain that turns evidence into oversight without slowing velocity.
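A minimal sketch, with hypothetical field names, of how system-level evidence might roll up into a portfolio summary and then into a short list of board-level flags. The point is the shape of the flow: each layer consumes the previous layer's output instead of commissioning a new report.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class SystemEvidence:
    """Evidence one AI system emits at the system level (fields are hypothetical)."""
    system: str
    owner: Optional[str]
    drift_alert: bool
    adoption_rate: float  # share of intended users actively using the system


def portfolio_rollup(evidence: list[SystemEvidence]) -> dict:
    """Portfolio view: aggregate per-system evidence into one summary."""
    return {
        "systems": len(evidence),
        "drift_alerts": sum(e.drift_alert for e in evidence),
        "unowned": [e.system for e in evidence if not e.owner],
        "mean_adoption": round(mean(e.adoption_rate for e in evidence), 2),
    }


def board_flags(summary: dict, min_adoption: float = 0.5) -> list[str]:
    """Board view: reduce the portfolio summary to the items that need oversight."""
    flags = []
    if summary["drift_alerts"]:
        flags.append(f"{summary['drift_alerts']} system(s) drifting")
    if summary["unowned"]:
        flags.append(f"no owner: {', '.join(summary['unowned'])}")
    if summary["mean_adoption"] < min_adoption:
        flags.append(f"portfolio adoption at {summary['mean_adoption']:.0%}")
    return flags


fleet = [
    SystemEvidence("maintenance-predictor", "ops-lead", False, 0.82),
    SystemEvidence("invoice-classifier", None, True, 0.30),
]
print(board_flags(portfolio_rollup(fleet)))
```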

Governance Across the AI Lifecycle

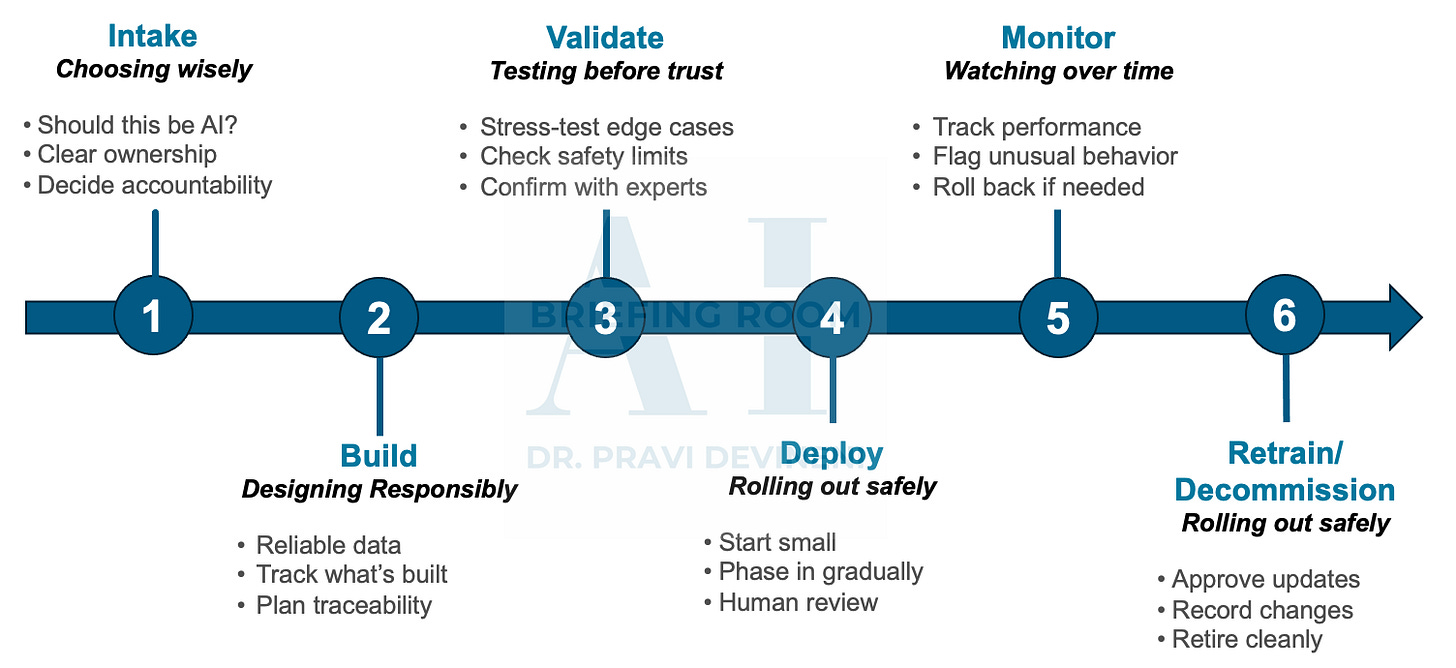

Governance isn’t a final review or a post-deployment checklist — it’s the continuity layer that runs through selection, execution, and sustained operation. Each phase has different governance needs, but together they create a system where AI can scale safely.

In Selection

Before any code is written, governance decides which projects get funded. It enforces the Three Proofs (Value, Scalability, Resilience) as funding gates, approving only projects with measurable impact and controlled risk.

Approves pilots with risk-adjusted business cases

Requires rollback and escalation plans before work begins, creating fail-safe mechanisms

Prevents pilots with no path to production

With governance, selection becomes disciplined capital allocation where risk and return are explicitly balanced.
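The gate logic is simple enough to write down. A minimal sketch, assuming each of the Three Proofs is recorded as a yes/no judgment from review and that "risk-adjusted" means discounting expected benefit by an estimated failure probability; both encodings, and all field names, are assumptions for illustration rather than the framework's definition.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """A pilot proposal; fields are hypothetical stand-ins for a real intake form."""
    name: str
    value_proof: bool        # measurable business impact demonstrated
    scalability_proof: bool  # credible path from pilot to production
    resilience_proof: bool   # failure modes identified and controlled
    rollback_plan: bool
    escalation_plan: bool
    annual_benefit: float
    annual_cost: float
    p_failure: float         # estimated probability the system fails to deliver


def risk_adjusted_net(p: Proposal) -> float:
    # Simple expected-value adjustment: discount the benefit by the chance of failure.
    return p.annual_benefit * (1 - p.p_failure) - p.annual_cost


def funding_gate(p: Proposal) -> list[str]:
    """Return reasons to reject; an empty list means the proposal passes the gate."""
    checks = [
        ("no value proof", p.value_proof),
        ("no path to production", p.scalability_proof),
        ("no resilience proof", p.resilience_proof),
        ("no rollback plan", p.rollback_plan),
        ("no escalation plan", p.escalation_plan),
    ]
    reasons = [label for label, ok in checks if not ok]
    if risk_adjusted_net(p) <= 0:
        reasons.append("negative risk-adjusted return")
    return reasons


pilot = Proposal("demand-forecast", True, False, True, True, True, 1_000_000, 400_000, 0.3)
print(funding_gate(pilot))  # ['no path to production']
```

Returning reasons instead of a bare yes/no keeps the decision explainable to the team whose pilot was turned down.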

In Execution

Once a project is funded, governance shifts from gatekeeping to embedded control. It enforces the Readiness Check and builds controls directly into the delivery pipeline.

Confirms ownership and data rights before launch

Blocks deployment without named owners who have budget authority

Automates fairness and drift tests at every build

Captures evidence for audit artifacts such as lineage, test results, and sign-offs

This transforms execution into repeatable engineering where guardrails are built into the process.
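A readiness check like this can run as an ordinary pipeline step. A minimal sketch, assuming hypothetical field names and illustrative thresholds (a 0.05 fairness gap, a 0.2 drift score); in a real pipeline the fairness and drift numbers would come from test jobs earlier in the same build, and a non-empty result would fail it.

```python
from dataclasses import dataclass, field
from typing import Optional

REQUIRED_ARTIFACTS = {"lineage", "test_results", "sign_offs"}


@dataclass
class Release:
    """A candidate deployment; fields and thresholds are hypothetical."""
    model: str
    owner: Optional[str]
    owner_has_budget: bool
    data_rights_confirmed: bool
    fairness_gap: float  # e.g., largest gap in positive-outcome rate between groups
    drift_score: float   # e.g., PSI of current inputs vs. training data
    artifacts: dict = field(default_factory=dict)


def readiness_check(r: Release, max_gap: float = 0.05, max_drift: float = 0.2) -> list[str]:
    """Return blocking issues; an empty list means the release may deploy."""
    blockers = []
    if not (r.owner and r.owner_has_budget):
        blockers.append("no named owner with budget authority")
    if not r.data_rights_confirmed:
        blockers.append("data rights not confirmed")
    if r.fairness_gap > max_gap:
        blockers.append(f"fairness gap {r.fairness_gap:.2f} exceeds {max_gap:.2f}")
    if r.drift_score > max_drift:
        blockers.append(f"drift {r.drift_score:.2f} exceeds {max_drift:.2f}")
    missing = REQUIRED_ARTIFACTS - set(r.artifacts)
    if missing:
        blockers.append(f"missing audit artifacts: {', '.join(sorted(missing))}")
    return blockers


candidate = Release("churn-model-v3", "j.doe", False, True, 0.08, 0.04,
                    {"lineage": "lineage.json", "test_results": "tests.xml"})
for issue in readiness_check(candidate):
    print("BLOCKED:", issue)
```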

Post-Deployment (Where Most Organizations Fail)

After launch is where governance matters most. Systems that launched perfectly begin to decay: data sources change, owners leave, and models drift. User adoption drops as a result.

Post-deployment governance sustains trust through continuous discipline:

Monitors drift, bias, and adoption continuously

Enforces retraining cadence tied to drift and performance thresholds

Runs incident response drills and quarterly rehearsals

Audits fairness and compliance, ensuring systems evolve with policy changes

Reports portfolio health and AI risk posture to the board

With governance, systems stay trustworthy over time because decay is caught early and addressed systematically.
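Tying retraining to explicit thresholds, rather than to someone remembering, is straightforward to encode. A minimal sketch, assuming three hypothetical triggers (a drift score above 0.2, accuracy below 0.90, or a model older than 90 days); the numbers are placeholders that each system's owner would set.

```python
from datetime import date, timedelta


def retraining_triggers(
    last_trained: date,
    today: date,
    drift_score: float,
    accuracy: float,
    *,
    max_age: timedelta = timedelta(days=90),
    drift_threshold: float = 0.2,
    min_accuracy: float = 0.90,
) -> list[str]:
    """Return every reason retraining is due; an empty list means no action needed."""
    reasons = []
    if drift_score > drift_threshold:
        reasons.append("drift")
    if accuracy < min_accuracy:
        reasons.append("performance")
    if today - last_trained > max_age:
        reasons.append("cadence")  # scheduled refresh even when metrics look healthy
    return reasons


print(retraining_triggers(date(2025, 1, 6), date(2025, 2, 3), drift_score=0.31, accuracy=0.93))
# ['drift']
print(retraining_triggers(date(2025, 1, 6), date(2025, 6, 2), drift_score=0.05, accuracy=0.86))
# ['performance', 'cadence']
```

Recording which trigger fired also feeds the audit trail and the board's view of portfolio health.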

Once governance runs through the lifecycle, resilience becomes routine.

In Closing

When governance runs through the system, from selection to monitoring to retraining, resilience stops being a goal and becomes a property of how AI operates.

Selection (Post #2) chooses the right bets, ensuring focus and avoiding wasted capital.

Execution (Post #3) makes those bets real, turning pilots into durable products.

Governance (Post #4) keeps them alive, trustworthy, auditable, and ROI-positive.

Trustworthy systems drive adoption. Adoption delivers ROI. That’s the governance value chain.

The enterprises that win won’t be those deploying the most AI. They’ll be the ones whose systems still work a year later: predictable, explainable, and trusted under real-world pressure.