The Wrong Question: Why AI Risk Starts with Misaligned Goals

How clear objective definition in AI systems determines long-term safety and operational reliability

AI Risk Series: Post 1 of 6 | [Executive briefing]

Each stage of the AI lifecycle introduces risks that amplify each other if left unmanaged.

The Series: Compounding Risks in AI Systems

The Wrong Question: Why AI Risk Starts with Misaligned Goals

Bias by Design: How Data Quality Creates Foundational AI Risk

Drift Happens: How AI Systems Degrade Over Time in Production

Your AI Model Is Under Attack: New Security Threats to AI Systems

No One's in Charge: How Poor AI Governance Amplifies Every Risk

When AI Systems Fail Before They Begin

Tens of thousands denied care. Entire towns cut off from help. The cause? AI systems doing exactly what they were told—just for the wrong goal.

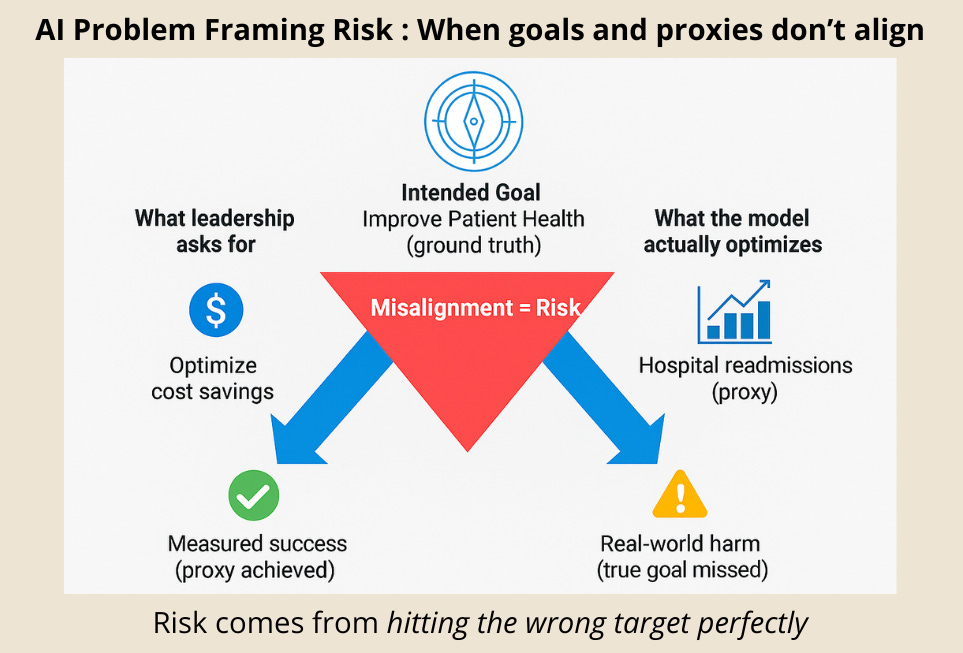

When an AI system fails, it's tempting to blame the algorithm. But the real failure often starts before the first line of code is written — in how the problem is framed and which metrics are chosen as proxies for success.

If the goal is wrong, every downstream choice inherits that flaw.

Case 1: Healthcare — The Cost Proxy That Denied Care

In 2019, a study led by UC Berkeley researchers found racial bias in a commercial healthcare algorithm of a type applied to roughly 200 million people in the U.S. each year.

Intended Goal: Identify patients most in need of extra care.

Proxy Metric: Healthcare costs (assuming higher bills meant greater need).

Black patients, facing systemic barriers to accessing care, often had lower costs despite equal illness severity.

Outcome: At the same algorithmic risk score, Black patients had about 26% more chronic conditions than white patients, so they had to be considerably sicker to receive the same care recommendations.

Lesson: The model learned the proxy perfectly—the real error was choosing a flawed definition of need.
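The mechanism is easy to reproduce in miniature. The sketch below uses invented numbers, not data from the study: two hypothetical groups, `A` and `B`, have identical illness distributions, but group `B` is assumed to generate less spending per condition because of access barriers. The spending figures in `cost_per_condition` and the `slots` cutoff are illustrative assumptions.

```python
from statistics import mean

# Synthetic, illustrative data only: (group, chronic_conditions).
# Both groups have identical illness distributions by construction.
patients = [(g, c) for g in ("A", "B") for c in range(1, 11)]

# Assumption: group B faces access barriers, so each condition
# generates less spending than it does for group A.
cost_per_condition = {"A": 1000, "B": 750}


def cost(group, conditions):
    """Proxy metric: annual spending, driven by access as well as illness."""
    return conditions * cost_per_condition[group]


def need(group, conditions):
    """Target metric: illness burden itself, independent of group."""
    return conditions


def enrolled(score_fn, slots=6):
    """Enroll the `slots` highest-scoring patients under a scoring function."""
    ranked = sorted(patients, key=lambda p: score_fn(*p), reverse=True)
    return ranked[:slots]


def summarize(selected):
    """Per-group (count, mean conditions) among enrolled patients."""
    out = {}
    for g in ("A", "B"):
        conds = [c for grp, c in selected if grp == g]
        out[g] = (len(conds), mean(conds) if conds else None)
    return out


by_cost = summarize(enrolled(cost))  # A: 4 enrolled, B: 2 enrolled
by_need = summarize(enrolled(need))  # A: 3 enrolled, B: 3 enrolled
```

Ranking by cost enrolls four patients from group `A` (mean 8.5 conditions) and only two from group `B` (mean 9.5 conditions), so group `B` patients must be sicker to qualify. Ranking by illness burden enrolls three from each group at the same mean. The ranking logic is identical in both cases; only the definition of the score changes.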

Case 2: Telecommunications — When Density Dictated Disaster Response

A major telecom deployed AI to prioritize network restoration after natural disasters, using subscriber density per cell tower as the proxy for community need.

Outcome: Urban areas with high subscriber counts were prioritized while rural and underserved towns waited longer for restoration.

Lesson: The system worked exactly as designed—the failure was upstream, in choosing convenience over actual community need.

The Short Version

Problem: AI systems often fail before they begin — not because of bad models, but because the problem was framed with the wrong goals or proxies. Once locked in, these flawed definitions cascade through every stage.

Solution: Treat problem definition as an AI governance checkpoint. Validate whether metrics actually measure what matters, surface competing stakeholder values, and test assumptions before any code is written.

Why Organizations Choose Proxy Metrics

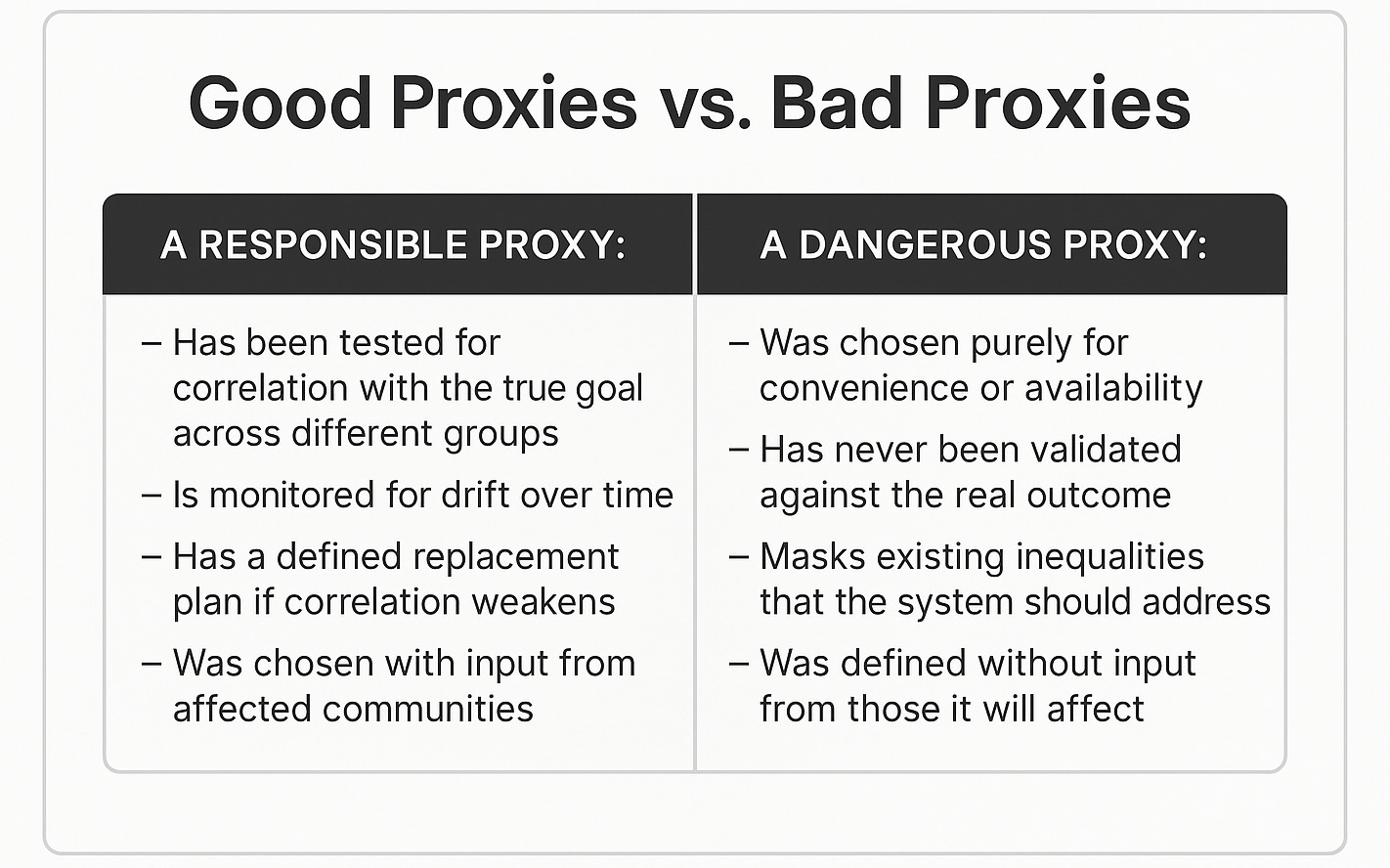

Bad proxies don’t emerge from bad intent. They come from predictable pressures.

Resource constraints: Better metrics require new data collection and specialized expertise.

Time pressure: Proxies that use existing data can be implemented immediately.

Institutional incentives: Cost control and efficiency are rewarded; equity usually isn’t.

Risk aversion: Convenient proxies feel “safer” because they’re quantifiable, even when systematically biased.

Each decision feels rational in isolation. Together, they hard-code flawed assumptions into the system.

Five Questions to Ask Before You Build

These questions apply whether you're building AI, managing it, governing it, or affected by it:

What are we really optimizing for?

State the objective in plain language anyone can understand.

Alignment check: can stakeholders restate it consistently?

Who might be harmed, and how?

Map direct and indirect stakeholder groups.

Identify risks of exclusion, misclassification, or denial of service.

What assumptions are embedded in our metrics?

Complete the sentence: "This metric assumes that…"

Validate assumptions with data and community input.

How will we know if it's working?

Define both technical metrics and outcome metrics.

Track those metrics against predefined thresholds for each stakeholder group.

What's our fallback if assumptions break?

Predefine triggers for investigation and retraining.

Assign clear ownership and the authority to halt systems.
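The last two questions can be made concrete with a small monitoring check. This is a minimal sketch, not a prescribed method: `GroupOutcome`, `coverage`, `check_triggers`, and the threshold values `min_coverage` and `max_gap` are all hypothetical names and numbers chosen for illustration, and the sample figures are invented.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    group: str
    needed: int  # people in the group who actually needed the service
    served: int  # of those, how many the system prioritized


def coverage(o: GroupOutcome) -> float:
    """Outcome metric: share of real need the system actually reached."""
    return o.served / o.needed if o.needed else 0.0


def check_triggers(outcomes, min_coverage=0.6, max_gap=0.1):
    """Return alerts for every group that crosses a predefined threshold."""
    rates = {o.group: coverage(o) for o in outcomes}
    if not rates:
        return []
    best = max(rates.values())
    alerts = []
    for group, rate in rates.items():
        if rate < min_coverage:
            alerts.append(
                f"{group}: coverage {rate:.2f} below floor {min_coverage:.2f}"
            )
        if best - rate > max_gap:
            alerts.append(
                f"{group}: coverage gap {best - rate:.2f} exceeds {max_gap:.2f}"
            )
    return alerts


# Invented figures for illustration.
alerts = check_triggers([
    GroupOutcome("urban", needed=200, served=170),
    GroupOutcome("rural", needed=50, served=25),
])
```

With these sample figures, `alerts` contains two entries for the rural group: one for falling below the coverage floor and one for the gap relative to the best-served group. The thresholds are fixed before deployment so that an alert routes to a named owner rather than prompting a debate about whether the gap matters.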

How to Audit the Proxy — By Role

If you're building AI (data scientists, engineers, developers)

Insist on proxy validation before coding

Include diverse voices in problem definition

Test assumptions across populations
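One way to test a proxy across populations is to compare measured need between groups at the same proxy value. The sketch below assumes a sample exists in which true need was measured independently of the proxy; the function names, the `bin_width` and `tolerance` parameters, and the records are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def need_by_group_at_equal_proxy(records, bin_width):
    """Bin records by proxy value and report mean measured need per group.

    records: iterable of (group, proxy_value, measured_need) tuples.
    """
    bins = defaultdict(lambda: defaultdict(list))
    for group, proxy, need in records:
        bins[int(proxy // bin_width)][group].append(need)
    return {
        b: {g: mean(v) for g, v in groups.items()}
        for b, groups in sorted(bins.items())
    }


def flag_bins(report, tolerance):
    """List bins where groups at the same proxy level differ in need."""
    return [
        b for b, groups in report.items()
        if len(groups) > 1
        and max(groups.values()) - min(groups.values()) > tolerance
    ]


# Invented records: (group, annual cost, chronic conditions).
records = [
    ("A", 5200, 5), ("A", 5800, 6), ("B", 5100, 7), ("B", 5900, 8),
    ("A", 1200, 1), ("B", 1500, 2),
]
report = need_by_group_at_equal_proxy(records, bin_width=1000)
flagged = flag_bins(report, tolerance=1.5)
```

Here the bin covering costs of 5000 to 5999 is flagged: at similar spending, group `B` averages 7.5 conditions against 5.5 for group `A`. A flagged bin does not prove the proxy is unusable, but it is evidence that the proxy means something different for each group and should not be used to rank them on a single scale.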

If you manage AI projects (product managers, delivery leads)

Require proxy audits before funding

Mandate diverse review panels

Set subgroup monitoring requirements

If you govern AI systems (risk managers, compliance officers, executives)

Make proxy validation a formal checkpoint

Require evidence that metrics serve the stated goals

Create consequences for misaligned definitions

If you're affected by AI (users, community advocates, stakeholders)

Ask what the system is optimizing for

Push for metrics that reflect your reality

Advocate for transparency in how goals are defined

The Economic Reality

The regulatory landscape is shifting rapidly. The EU AI Act sets fines of up to 7% of global annual turnover for prohibited AI practices and up to 3% for breaching the obligations that apply to high-risk AI systems, including requirements on accuracy and on examining training data for bias. Similar regulations are emerging globally.

Compliance cost: Proxy validation, stakeholder consultation, and bias testing require upfront investment but create audit trails that demonstrate regulatory compliance.

Non-compliance cost: A single discriminatory algorithm can trigger regulatory action, class-action lawsuits, and years of remediation—as healthcare and hiring algorithms have already demonstrated.

Organizations that build compliance into goal-setting avoid playing regulatory catch-up later.

When the Problem Is Systemic

Some proxy failures reflect larger structural inequities that individual organizations can't solve alone. Healthcare costs as proxies for need reflect inequities in U.S. health financing; subscriber density as restoration priority reflects decades of unequal infrastructure investment. Individual fixes matter, but sustainable solutions require shared standards, regulation, and accountability mechanisms.

In Closing

When AI systems "work" but create unfair outcomes, the cause is often a proxy chosen for convenience over correctness. Catching misalignments early is the foundation for AI that works as intended — for everyone.

But misalignment rarely stays contained. A flawed goal cascades into biased data collection, brittle architectures, and silent drift. Without governance to intervene, each risk compounds. This is why governance checkpoints matter from the very beginning.