Built-In Risk: How AI System Design Shapes Safety Outcomes

How infrastructure and integration choices define your system's resilience—or fragility

AI Risk Series: Post 3 of 6 | [Executive briefing]

Each stage of the AI lifecycle introduces risks that amplify each other if left unmanaged.

The Series: Compounding Risks in AI Systems

The Wrong Question: Why AI Risk Starts with Misaligned Goals

Bias by Design: How Data Quality Creates Foundational AI Risk

Drift Happens: How AI Systems Degrade Over Time in Production

Your AI Model Is Under Attack: New Security Threats to AI Systems

No One's in Charge: How Poor AI Governance Amplifies Every Risk

The Problem: When "Good Code" Ships a Bad Outcome

If you've ever taken apart a perfectly working gadget and put it back together wrong, you know the feeling: every component works, but the system doesn't. AI is the same. Most organizations frame AI risk as a model problem, but the biggest failures often trace back to how the system was put together.

According to RAND Corporation research, AI projects fail at twice the rate of traditional software projects. The difference often comes down to system design.

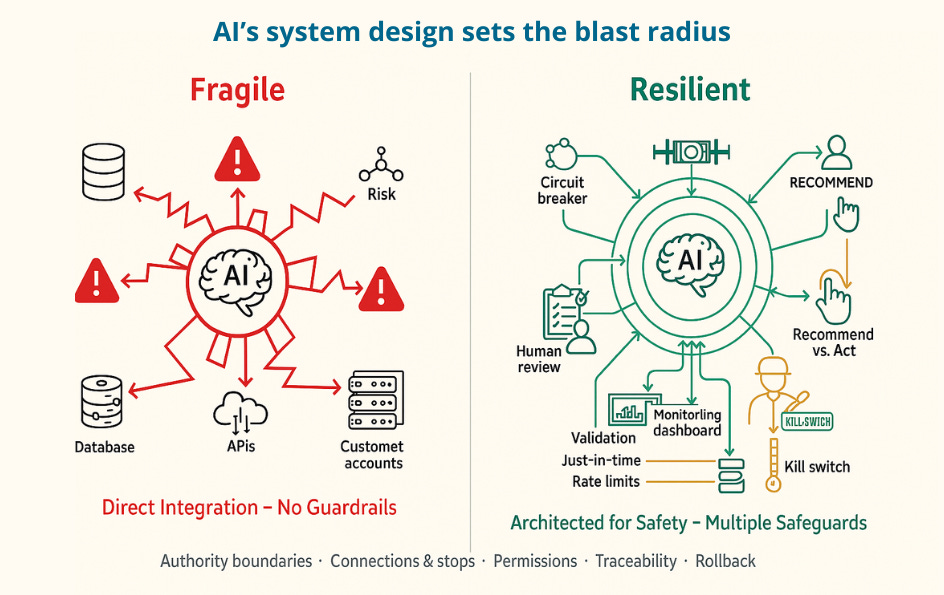

By system design, I mean the practical choices that govern a model's real-world power:

Authority: whether it can only suggest actions or can also take them

Connections: which external systems it can reach, and what limits that access

Permissions: which access rights it holds, and for how long

Traceability: the record trail needed to reconstruct what it did

Rollback: staged testing and emergency stops that let you reverse course
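To make the Authority and Permissions choices concrete, here is a minimal Python sketch built on a hypothetical agent wrapper. `ScopedAgent`, `Grant`, and the `refund` action are invented for illustration and do not come from a real library. The model acts only within an explicit, expiring grant that caps each action's size. Any other request is downgraded to a recommendation for a human.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Grant:
    """Hypothetical time-limited permission for a single action."""
    action: str
    max_amount: float  # caps the blast radius of any one action
    expires_at: float  # Unix timestamp after which the grant is void


@dataclass
class ScopedAgent:
    """Hypothetical wrapper: recommend-only by default, act only under a live grant."""
    grants: Dict[str, Grant] = field(default_factory=dict)

    def allow(self, action: str, max_amount: float, ttl_s: float,
              now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        self.grants[action] = Grant(action, max_amount, now + ttl_s)

    def execute(self, action: str, amount: float, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        grant = self.grants.get(action)
        # No grant, an expired grant, or an oversized request all fall back to "suggest".
        if grant is None or now >= grant.expires_at or amount > grant.max_amount:
            return f"RECOMMEND {action}({amount}) for human approval"
        return f"EXECUTED {action}({amount})"


agent = ScopedAgent()
agent.allow("refund", max_amount=100.0, ttl_s=3600)
print(agent.execute("refund", 40.0))    # within the grant: executed
print(agent.execute("refund", 5000.0))  # over the cap: recommendation only
```

Because "recommend" is the default, a missing or stale permission fails safe rather than open.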

These system design decisions determine whether misaligned goals (Post 1) and biased data (Post 2) stay contained or spread across your enterprise. Incident investigations keep showing the same pattern: failures hinge on integration and authority, not on the core model math.

Case 1: Autonomous Vehicles — Perception vs. Planning

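Uber's self-driving test vehicle split the work between two components: perception (detecting objects) and planning (deciding actions). On March 18, 2018, in Tempe, Arizona, its sensors detected pedestrian Elaine Herzberg about six seconds before impact. The fatal flaw was architectural: emergency braking was disabled while the vehicle was under computer control, no empowered safety controller could override planning decisions, and the design relied on the human safety operator to intervene but included no alert to warn her. The system determined that emergency braking was needed about 1.3 seconds before impact, but it was not permitted to brake, and the car struck and killed Herzberg.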

Lesson: Component accuracy can't compensate for architecture that lacks veto authority and cross-checks.
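The Python sketch below makes "veto authority" concrete. It gives an independent monitor the power to override whatever the planner proposes. `planner`, `safety_monitor`, and the 2-second time-to-collision threshold are all hypothetical. The sketch illustrates the architectural principle and is not a model of Uber's software or of any production vehicle stack.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float         # distance to the detected object
    closing_speed_mps: float  # positive when the gap is shrinking


def planner(obstacle: Obstacle) -> str:
    """Stand-in planner that (wrongly) keeps cruising, e.g. after misjudging an object's path."""
    return "cruise"


def safety_monitor(obstacle: Obstacle, min_ttc_s: float = 2.0) -> bool:
    """Independent cross-check: True when time-to-collision falls below the threshold."""
    if obstacle.closing_speed_mps <= 0:
        return False  # not closing in, so no collision course
    return obstacle.distance_m / obstacle.closing_speed_mps < min_ttc_s


def decide(obstacle: Obstacle) -> str:
    """The monitor holds veto authority: it can override the planner, never the reverse."""
    proposed = planner(obstacle)
    if safety_monitor(obstacle):
        return "emergency_brake"
    return proposed


print(decide(Obstacle(distance_m=20.0, closing_speed_mps=17.0)))  # about 1.2 s to impact
```

The key design choice is that the monitor does not depend on the planner's reasoning. It cross-checks a simple physical quantity, so a planning error alone cannot suppress braking.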

Case 2: Enterprise Chatbots — Authority Without Boundaries

A Chevrolet dealership deployed a customer service chatbot to answer questions about vehicles and pricing, provide helpful information, and generate leads. The flaw was architectural: the chatbot could make pricing statements and contractual claims with no boundaries and no human oversight. A user applied prompt injection to get it to offer a car for $1 and to declare the agreement "legally binding."

Lesson: The risk isn't just "hallucination"—it's authority. Architecture determines blast radius.
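One way to bound a chatbot's authority is to check its replies in ordinary code after the model generates them. Because the check sits outside the model, no prompt can talk it out of running. The Python sketch below uses hypothetical patterns and a hypothetical handoff message. Keyword matching like this is crude and easy to evade. Treat the sketch as an illustration of where the boundary sits, not as a robust filter.

```python
import re
from typing import Tuple

# Hypothetical patterns for statements the bot has no authority to make.
PRICE = re.compile(r"\$\s?\d")
COMMITMENT = re.compile(r"legally binding|binding offer|guarantee", re.IGNORECASE)

HANDOFF = "I can't confirm prices or agreements. A sales representative will follow up."


def enforce_authority(reply: str) -> Tuple[str, bool]:
    """Return (reply_to_send, escalated).

    Runs outside the model, so it applies no matter what the prompt said.
    """
    if PRICE.search(reply) or COMMITMENT.search(reply):
        return HANDOFF, True  # block the reply and route the conversation to a human
    return reply, False


print(enforce_authority("Deal! $1, and that's a legally binding offer."))
```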

Both cases show the same pattern: working components, architectural authority problems, real-world harm.

The Short Version

Problem: AI systems inherit risk from architecture and deployment as much as from models. Integration patterns, safeguards, and rollout strategies either multiply or mitigate failure.

Solution: Treat system design as a first-class risk surface. Review, test, and govern architecture with the same rigor as models.
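A design review can start as a simple, repeatable checklist. The Python sketch below records the five design choices (authority, connections, permissions, traceability, rollback) for one system and reports gaps. The `SystemDesign` fields and the specific rules are hypothetical examples of what a review might check. They are not a standard.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SystemDesign:
    """Hypothetical record of one AI system's architectural choices."""
    name: str
    can_take_actions: bool                                  # Authority
    connections: List[str] = field(default_factory=list)    # Connections
    permission_ttl_minutes: Optional[int] = None            # Permissions (None = never expires)
    audit_log: bool = False                                 # Traceability
    staged_rollout: bool = False                            # Rollback
    kill_switch: bool = False                               # Rollback


def review(d: SystemDesign) -> List[str]:
    """Return architectural gaps found by these (illustrative) rules."""
    gaps = []
    if d.can_take_actions and not d.kill_switch:
        gaps.append("takes actions without an emergency stop")
    if d.connections and d.permission_ttl_minutes is None:
        gaps.append("external access never expires")
    if d.connections and not d.audit_log:
        gaps.append("external calls are not logged")
    if not d.staged_rollout:
        gaps.append("no staged rollout")
    return gaps


bot = SystemDesign("support-bot", can_take_actions=True, connections=["pricing_api"])
for gap in review(bot):
    print("-", gap)
```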

Why This Risk Emerges

Speed-to-market: Ship now, harden later

Vendor incentives: Pre-packaged integrations make "direct connect" the default

Cost efficiency: Shared services reduce spend but increase coupling

Accountability gaps: Leaders track model metrics, not system safety

Each shortcut feels rational for individual projects but creates systemic fragility across the enterprise.

Five Questions to Ask About Your System Design

Who owns architectural risk?

Is there clear governance for design approval?

Who has authority to halt deployment for safety concerns?

How are privileges scoped?

Can the model only "recommend," or can it take action?

What's the blast radius of model decisions?

What happens when the model fails?

Is there a fallback process (human override, alternative system)?

How quickly can the system fall back to a degraded but safe mode?

How are components tested in combination?

Do subsystems interact safely under stress?

What happens when individual components fail?

What's the update/rollback plan?

Are rollouts staged and reversible?

How quickly can you revert to a known-good state?
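Code can partly answer the "What happens when the model fails?" questions. This Python sketch wraps a model call so that an outage degrades to a rule-based alternative. It also sends a low-confidence answer to a human instead of acting on it. All names are hypothetical, and the 0.8 confidence threshold is an arbitrary placeholder.

```python
from typing import Callable, List, Tuple


class FallbackRouter:
    """Hypothetical wrapper that degrades gracefully instead of failing outright."""

    def __init__(self, model: Callable[[str], Tuple[str, float]],
                 rules: Callable[[str], str], min_confidence: float = 0.8):
        self.model = model                 # primary AI path: returns (answer, confidence)
        self.rules = rules                 # simpler alternative system
        self.min_confidence = min_confidence
        self.human_queue: List[str] = []   # requests awaiting human override

    def answer(self, request: str) -> str:
        try:
            label, confidence = self.model(request)
        except Exception:
            # Model unavailable or erroring: fall back to the rule-based path.
            return self.rules(request)
        if confidence < self.min_confidence:
            # Model unsure: do not act; hand the decision to a person.
            self.human_queue.append(request)
            return "pending_human_review"
        return label
```

In a real system you would likely catch narrower exception types and log each fallback, so the audit trail shows how often the model was bypassed.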

How to Review Architecture — By Role

If you're building AI (data scientists, engineers, developers)

Implement guardrails (least-privilege access, circuit breakers, timeouts)

Run integration stress tests, not just model benchmarks

Document assumptions and failure modes between subsystems
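A circuit breaker is one of the guardrails listed above. The minimal, single-threaded Python sketch below stops calling a failing dependency after a run of consecutive failures. During a cooldown it rejects calls so callers can switch to a fallback, then lets one trial call through. Class and parameter names are hypothetical and do not come from a specific library. A timeout would normally sit inside the wrapped call and count as a failure; it is not implemented here.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when calls are blocked because the breaker is open."""


class CircuitBreaker:
    """Minimal circuit breaker: stop calling a failing dependency for a cooldown period."""

    def __init__(self, failure_threshold=3, cooldown_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                raise CircuitOpenError("dependency disabled; use fallback")
            # Cooldown elapsed: half-open, so let one trial call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```

Injecting `clock` keeps the breaker testable without real waiting. A multi-threaded service would also need a lock around the state changes.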

If you manage AI projects (product managers, delivery leads, program directors)

Require red-team testing before any production launch

Stage deployments with canary rollouts and kill switches

Assign clear ownership for architectural risk assessment
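A canary rollout with a kill switch fits in a few lines. In this Python sketch, the version names, stage percentages, and 2% error threshold are hypothetical. Users are hashed into stable buckets, so the same user always sees the same version. Traffic to the new version grows only while error rates stay healthy, and the kill switch returns everyone to the known-good version.

```python
import hashlib


class CanaryRollout:
    """Hypothetical staged rollout: route a growing slice of traffic to a new model version."""

    def __init__(self, stable: str, candidate: str,
                 stages=(1, 10, 50, 100), max_error_rate=0.02):
        self.stable = stable            # known-good version
        self.candidate = candidate      # new version under test
        self.stages = list(stages)      # percent of users on the candidate at each stage
        self.stage_index = 0
        self.max_error_rate = max_error_rate
        self.killed = False

    @property
    def percent(self) -> int:
        return 0 if self.killed else self.stages[self.stage_index]

    def route(self, user_id: str) -> str:
        """Deterministically map each user to a bucket from 0 to 99."""
        bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
        return self.candidate if bucket < self.percent else self.stable

    def report(self, error_rate: float) -> None:
        """Advance one stage if healthy; otherwise trip the kill switch."""
        if error_rate > self.max_error_rate:
            self.kill()
        elif not self.killed and self.stage_index < len(self.stages) - 1:
            self.stage_index += 1

    def kill(self) -> None:
        """Kill switch: send all traffic back to the known-good version."""
        self.killed = True


rollout = CanaryRollout("model-v1", "model-v2")
rollout.report(error_rate=0.004)  # healthy: 1% -> 10%
rollout.report(error_rate=0.09)   # unhealthy: kill switch trips
print(rollout.percent, rollout.route("user-42"))
```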

If you govern AI systems (risk managers, compliance officers, executives)

Mandate design reviews alongside model reviews

Require evidence of fallback plans and privilege scoping

Audit vendor integrations for hidden risk surfaces

If you're affected by AI (users, community advocates, stakeholders)

Ask about system safeguards and human oversight

Push for transparency in how AI decisions can be challenged

Advocate for staged rollouts that allow feedback and correction

The Economic Reality

Architecture choices lock in operational costs for years. Brittle AI systems cost more to run: more frequent outages, longer resolution times, and failures that cascade across business units.

Resilience ROI: Investment in fallback mechanisms and circuit breakers pays dividends through higher uptime, faster recovery, and contained failure impact.

Fragility cost: Single points of failure create business continuity risks that compound during peak demand, regulatory scrutiny, or competitive pressure.

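The EU AI Act requires human oversight for high-risk systems. Violating its high-risk obligations can bring fines of up to €15M or 3% of global annual turnover, and the top tier, €35M or 7%, applies to prohibited AI practices. Those penalties turn architectural safeguards from best practice into compliance requirements.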

In Closing

Design Choices Are Safety Choices

Models don’t operate in isolation. They live in complex systems, and their reliability is bounded by the weakest integration point. Good architecture isn’t just technical plumbing — it’s a safety and resilience strategy.

Brittle architectures do the opposite: they magnify other risks, turning misaligned proxies into systemic harm, biased data into operational fragility, and drift into silent outages. Governance is the mechanism that ensures architecture is treated as a risk surface, not just an engineering detail.