Responsible AI: From Principles to Production

Trust in AI isn’t declared — it’s engineered through systematic controls || Edition 9

You’ll hear it in every strategy deck: “We’re committed to responsible AI.”

But Amazon’s hiring algorithm still rejected qualified women. Apple’s credit card still gave women lower limits with no explanation. Air Canada’s chatbot still gave legally binding bad refund advice.

These failures weren’t sudden — they were the predictable result of risks that build silently and break loudly. Small biases compound, transparency gaps widen, accountability weakens, until a public failure forces recognition.

The problem isn’t missing principles, it’s that they never left the ethics deck. Trust in AI isn’t declared — it’s engineered through systematic, testable controls wired into production systems.

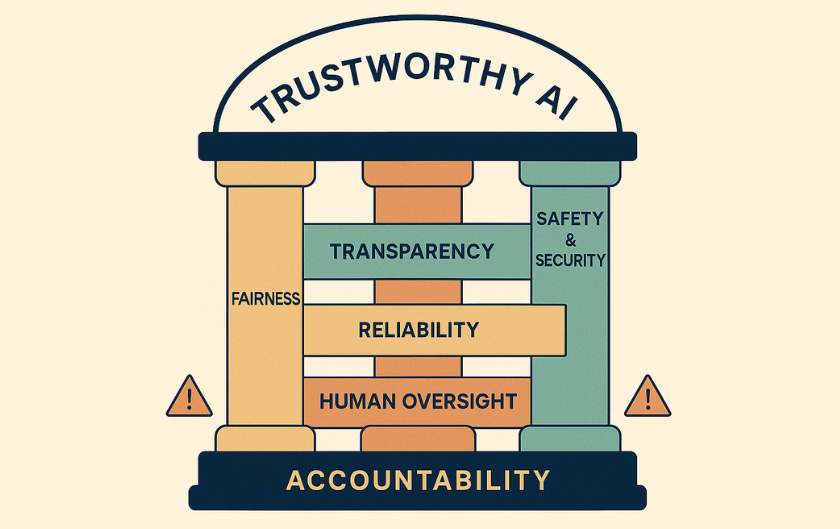

Responsible AI is only real when it becomes Trustworthy AI — systems that earn trust today and keep it tomorrow.

The Short Version

Problem: AI systems can cause real-world harm even when technically correct. With the EU AI Act, growing litigation, and rising consumer awareness, the stakes have never been higher.

Solution: Responsible AI provides guiding principles — fairness, transparency, accountability, privacy, safety, reliability, and human oversight — that align AI with legal, ethical, and business values.

But values don’t protect anyone unless they’re operationalized. That means wiring controls into the AI stack across its lifecycle.

Here’s what it looks like in practice:

Fairness → Bias checks in data, models, and audits prevent discrimination and sustain legitimacy.

Transparency → Explainable models and user-facing explanations make systems contestable and credible.

Accountability → Clear ownership and escalation paths ensure recourse when failures happen.

Privacy → Strong data governance and privacy-preserving ML protect rights and confidence.

Safety & Security → Adversarial testing and incident response prevent exploits and misuse.

Reliability → Drift detection, retraining, and rollback keep AI dependable under change.

Human oversight → Overrides and appeals preserve human judgment in high-stakes decisions.

Together, these seven principles form a multi-dimensional trust framework. Miss one, and confidence in the system degrades. Implement systematically, and you build resilience.

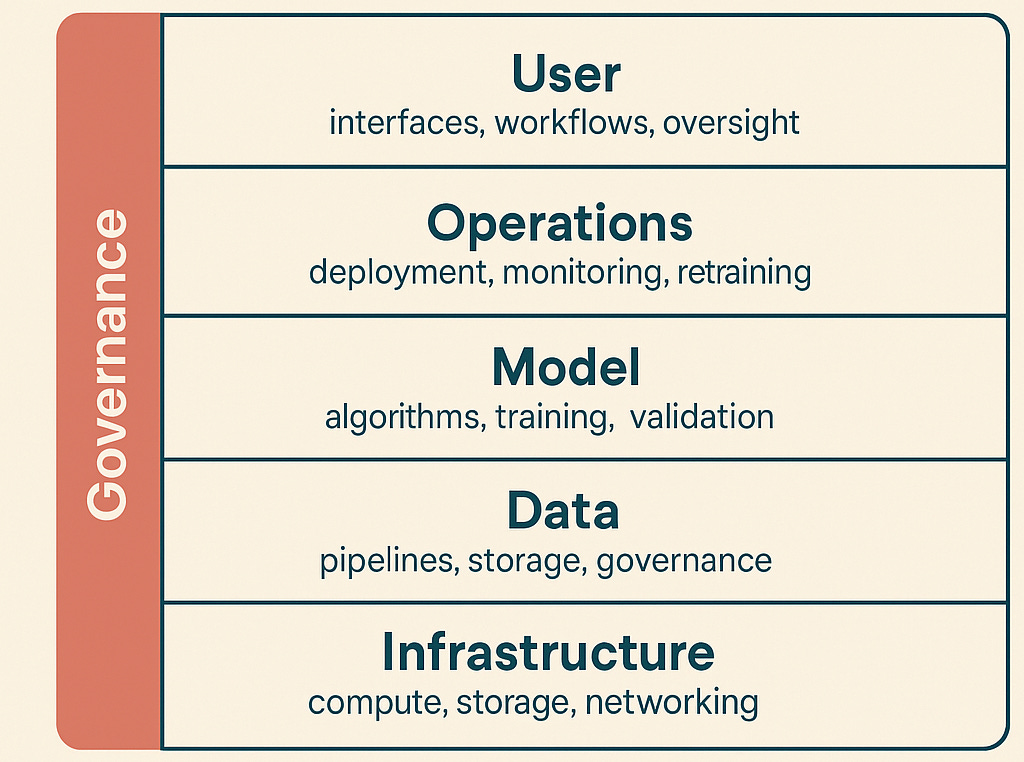

Where Principles Live: The AI Architecture Stack

To make Responsible AI principles real, they have to be wired into the architecture of enterprise AI systems. Each layer plays a distinct role:

Infrastructure: Foundational compute, storage, and networking resources that power AI.

Data: Pipelines, storage, and governance mechanisms that manage the lifecycle of data.

Model: Algorithms and training processes that turn data into predictions or actions.

Operations: Deployment, monitoring, and maintenance systems that keep AI running in production.

Governance: Policies, controls, and accountability mechanisms that oversee the AI lifecycle.

User: Interfaces and workflows where humans interact with and oversee AI outputs.

Common failure mode: organizations implement principles in isolation, rather than systematically across the stack.

The Seven Principles That Sustain Trust

1. Fairness: The Foundation of Legitimacy

A widely used healthcare AI algorithm prioritized healthier white patients as needing more care than Black patients who were 26% sicker at identical risk scores. Fairness failures don’t just harm individuals — they destroy legitimacy and can literally cost lives.

Controls for trust:

Data layer → bias detection and representative sampling in pipelines

Model layer → subgroup performance metrics during validation

Governance layer → mandatory non-discrimination audits before deployment

2. Transparency: When Black Boxes Break Trust

Apple’s AI-driven credit card algorithm gave women lower credit limits than men with no explanation. The outcome wasn’t just unfair — it was indefensible. Transparency principle: if you can’t explain it, you can’t defend it.

Controls for trust:

Model layer → interpretable models and feature attribution

Governance layer → model cards, decision logs, and documentation

User layer → plain-language explanations surfaced in interfaces

3. Accountability: Who Owns the Failure?

Air Canada was sued when its chatbot gave false refund advice. The airline’s defense? The chatbot was a “separate legal entity.” Courts rejected it instantly. Without accountability, trust evaporates the moment something goes wrong.

Controls for trust:

Governance layer → RACI matrices and named model owners

Operations layer → deployment sign-off workflows

User layer → escalation paths and appeals mechanisms

4. Privacy & Data Governance: Respecting Rights

Clearview AI scraped billions of photos from social media without consent, triggering bans in multiple countries. Privacy failures don’t just break laws — they break trust.

Controls for trust:

Data layer → anonymization, encryption, lineage tracking

Model layer → differential privacy, federated learning

Governance layer → retention and deletion policies tied to regulation

5. Safety & Security: Guardrails Against Misuse

Prompt injection vulnerabilities can cause generative AI systems to ignore system guardrails and expose sensitive data, revealing that security must go beyond servers and into how models interpret inputs. AI security failures aren’t theoretical — they happen in days, not years.

Controls for trust:

Model layer → adversarial robustness testing and red-teaming

Operations layer → secure APIs, access controls, anomaly detection

Governance layer → AI-specific incident response protocols

6. Reliability & Robustness: Performing Under Change

Tesla’s Autopilot lawsuits reveal a core truth: models can perform flawlessly in the lab but fail catastrophically when conditions shift. Reliability is what keeps systems trusted over time.

Controls for trust:

Data layer → validation rules and drift monitoring

Model layer → retraining pipelines and stress testing

Operations layer → rollback and failover strategies

7. Human Oversight: The Last Guardrail

COMPAS risk scores influenced sentencing despite being biased and opaque. Judges treated it as unquestionable and trust in the system collapsed. High-stakes AI must keep humans in control.

Controls for trust:

User layer → human-in-the-loop checkpoints and override buttons

Governance layer → escalation and appeal processes

Operations layer → monitoring human-AI interactions to flag over-reliance

Prioritizing Under Constraints

When resources are tight, skipping principles isn’t an option — but sequencing matters.

Start with your highest risk exposure:

High-stakes decisions (medical, financial, legal) → Prioritize Fairness and Human Oversight first

Consumer-facing systems → Lead with Transparency and Accountability

Regulated industries → Privacy and Safety often mandatory by law

Rapidly scaling systems → Reliability prevents costly failures as you grow

Once immediate risks are covered, think in phases:

Foundation → Establish Accountability, enforced through governance processes (ownership, approval gates, incident response). Without ownership, nothing sticks.

Risk Mitigation → Add Fairness, Safety, and Privacy in high-risk or regulated systems to prevent the costliest failures.

User Trust → Layer in Transparency, Human Oversight, and Reliability as adoption grows.

Rule of thumb: every principle becomes essential as systems mature. Cut corners, and trust erodes — forcing expensive retrofits later.

The Competitive Reality

Organizations that systematically implement these principles don’t just avoid risk — they build competitive moats. Companies deploying generative AI with guardrails are 27% more likely to achieve higher ROI performance. 75% of executives view ethics and trust as sources of competitive differentiation.

Trustworthy AI becomes a strategic advantage when customers, regulators, and partners choose you because your systems are reliable, fair, and accountable.

Enterprises that move early are already seeing benefits: faster regulatory approvals, premium pricing for trustworthy AI services, reduced insurance premiums, higher customer retention — and another edge that’s often overlooked: audit readiness. Being able to demonstrate compliance with the EU AI Act, NIST RMF, or ISO/IEC 42001 doesn’t just avoid fines. It accelerates approvals, unlocks partnerships, and builds trust that compounds over time.

In Closing

Responsible AI only matters when it becomes Trustworthy AI — systems that earn confidence today and keep it tomorrow.

That doesn’t happen through posters or ethics decks. It happens through systematic, testable controls: bias detection in pipelines, explainability in interfaces, drift monitoring in production, ownership in governance, and humans in the loop.

Trust isn’t declared. It’s engineered. Enterprises that wire principles into the stack will withstand scrutiny, failure, and attack — and build AI systems worthy of adoption.