Agentic AI 101 — An Executive Primer

A practical guide for executives: components, patterns, risks, and business impact || Edition 17

This post is part of the Agentic AI Series — a multi-part exploration of how autonomous systems are reshaping enterprise architecture, governance, and security. Each post builds from fundamentals to practical design and control frameworks.

The Short Version

What it is: Agentic AI is software that can make intelligent decisions, take action, and adapt—closing loops without waiting for constant human instruction.

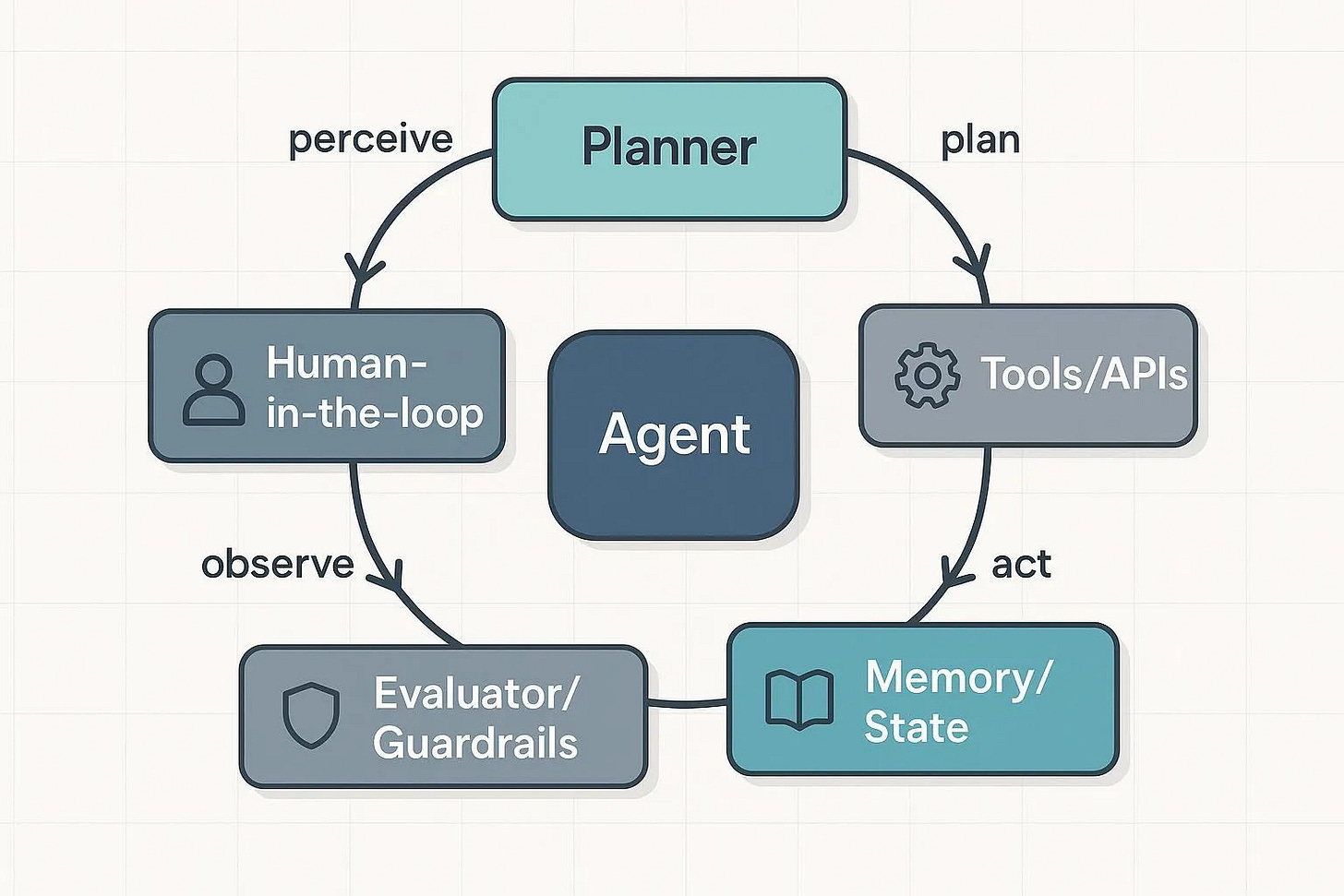

How it works: It runs on four components—Planner, Tools, Memory, and Guardrails—forming a continuous cycle of observe → perceive → plan → act.

Why now: Reasoning, context, connectivity, and governance have matured enough to make safe autonomy possible.

What it enables: Systems that don’t just generate outputs but deliver outcomes across enterprise workflows.

Why it matters: Autonomy without alignment creates drift; alignment without visibility creates bureaucracy. Agentic AI sits in the balance—governed autonomy at scale.

What is Agentic AI?

Agentic AI is a class of artificial intelligence focused on autonomous systems—software that can make decisions and execute multi-step workflows with limited human supervision.

In practice, these systems observe their environment, perceive what’s happening, plan a response, and act, continuously adjusting based on feedback.

At its core, Agentic AI represents a shift from AI as a responder to AI as an actor—systems that can reason, execute, and adapt within clearly defined boundaries.

What Makes an AI System Agentic?

Agentic systems are defined by their behavior. They exhibit five consistent traits that emerge from how their components interact.

Autonomous Decision-Making: They make context-aware choices without waiting for human instruction.

Goal-Oriented Reasoning: They reason backward from outcomes, creating flexible plans instead of following fixed scripts.

Proactive Behavior: They anticipate opportunities and risks before being prompted, taking initiative within defined limits.

Continuous Learning: They improve through feedback, retaining lessons across cycles while staying under control.

Environmental Adaptability: They maintain performance even as data, conditions, or policies change.

The Core Components of Agentic AI

Four components make the system work and enable autonomous behavior.

Planner: Decides what to do next by breaking goals into steps and choosing the right tools.

Tools: Execute those steps by acting on systems, data, or processes.

Memory: Tracks what’s happened so far and provides context for future decisions.

Guardrails: Keep actions safe, valid, and reversible through rules, checks, and human oversight.
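For readers who want to see the moving parts, the four components can be sketched in a few lines of Python. This is a minimal illustration rather than a reference architecture: the thermostat scenario and the names `TOOLS`, `plan_next_step`, `guardrail_allows`, and `run_agent` are all hypothetical, invented for this sketch.

```python
from typing import Callable, Optional

# Hypothetical scenario: an agent nudges a room toward a target temperature.

# Tools: the only actions the agent may take on its environment.
TOOLS: dict[str, Callable[[float], float]] = {
    "heat": lambda temp: temp + 1.0,
    "cool": lambda temp: temp - 1.0,
}


def plan_next_step(temp: float, target: float) -> Optional[str]:
    """Planner: choose the next tool, or None when the goal is met."""
    if temp < target:
        return "heat"
    if temp > target:
        return "cool"
    return None


def guardrail_allows(tool: str, steps_taken: int, max_steps: int) -> bool:
    """Guardrails: allow only known tools and cap the number of actions."""
    return tool in TOOLS and steps_taken < max_steps


def run_agent(temp: float, target: float, max_steps: int = 10) -> tuple[float, list[str]]:
    """Run the loop until the goal is met or a guardrail stops it."""
    memory: list[str] = []  # Memory: record of the actions taken so far.
    while True:
        tool = plan_next_step(temp, target)  # observe the state and plan
        if tool is None:
            break  # goal reached
        if not guardrail_allows(tool, len(memory), max_steps):
            memory.append("escalate_to_human")  # hand off instead of acting
            break
        temp = TOOLS[tool](temp)  # act on the environment
        memory.append(tool)
    return temp, memory
```

Calling `run_agent(18.0, 21.0)` heats three times and stops at the target. With `max_steps=2` and a target of 30.0, the guardrail halts the agent after two actions and records an escalation rather than letting it continue unchecked.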

Toy Example: Pac-Man

If you’ve ever played Pac-Man, you’ve already seen a simple agentic system in action.

In the game, the agents are Pac-Man and the four ghosts: Blinky, Pinky, Inky, and Clyde. Each agent has its own goal and strategy, and all of them play by the same rules. Pac-Man’s goal is to clear all pellets without being caught. The ghosts’ goal is to catch Pac-Man, each with its own pursuit pattern.

The Planner decides how to move based on what’s visible in the maze.

Tools are simple moves—up, down, left, right—and power pellets that can temporarily flip advantage.

Memory reflects the state of the maze—pellets eaten, paths open, and where threats or opportunities last appeared. Each agent perceives that state differently based on its goal.

Guardrails are the maze walls, movement limits, and the “game over” condition that defines success or failure.

Each loop—observe → perceive → plan → act—updates the environment and reshapes every agent’s decision. The system’s behavior doesn’t come from one agent’s intelligence but from how all agents pursue their goals within shared boundaries.

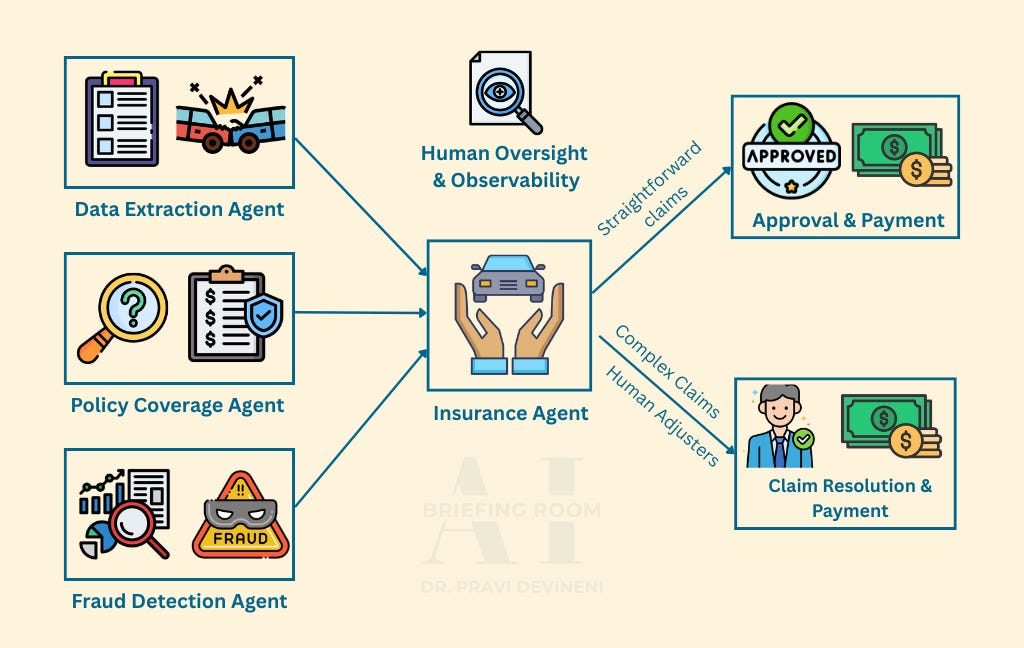

Real-World Example: Insurance Claims

When a claim is submitted, an agent orchestrates multiple AI systems in sequence—one extracts data from forms, another analyzes photos, a third checks policy coverage, and a fraud module compares the case against historical patterns.

For straightforward claims, the agent can approve payouts automatically and trigger payments, while complex cases are routed to human adjusters for review.

The result is a governed, end-to-end process that combines autonomy with oversight—routine work handled by AI, accountability retained by humans.
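The routing decision at the heart of this workflow can be sketched as a single function. This is an illustration under stated assumptions, not how any particular insurer works: the `Claim` fields, the `route_claim` function, and both threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    amount: float        # requested payout
    covered: bool        # result of the policy-coverage check
    fraud_score: float   # 0.0 (clean) to 1.0 (suspicious), from the fraud module


# Hypothetical thresholds; a real insurer would set these by policy.
AUTO_APPROVE_LIMIT = 1_000.00
FRAUD_SCORE_LIMIT = 0.2


def route_claim(claim: Claim) -> str:
    """Return 'auto_approve' for straightforward claims, else 'human_review'."""
    straightforward = (
        claim.covered
        and claim.amount <= AUTO_APPROVE_LIMIT
        and claim.fraud_score < FRAUD_SCORE_LIMIT
    )
    return "auto_approve" if straightforward else "human_review"
```

Anything that fails one of the three checks goes to a human adjuster, so the default path is oversight and automation is the exception that has to be earned.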

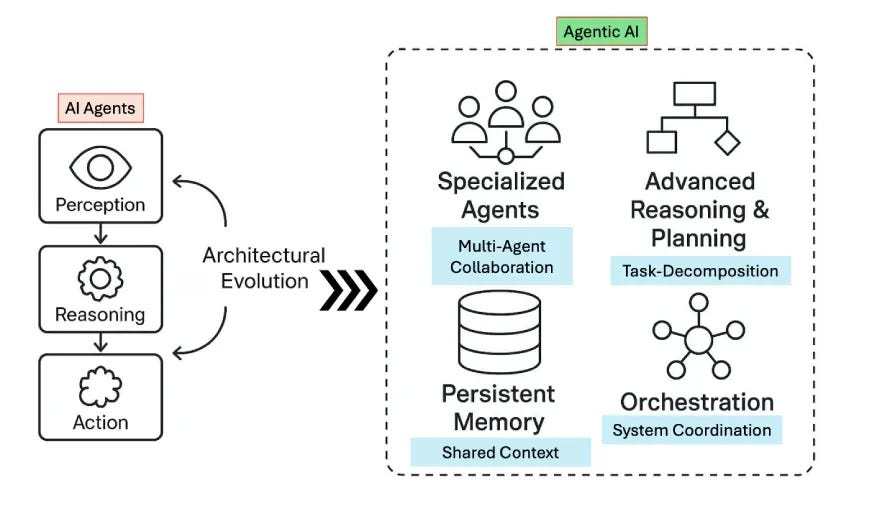

AI Agents vs. Agentic AI

An AI agent is a single-purpose system that performs a specific task, such as classifying emails, reconciling transactions, or responding to a user query.

Agentic AI coordinates many such agents and tools toward a broader outcome, reasoning across steps and enforcing shared constraints.

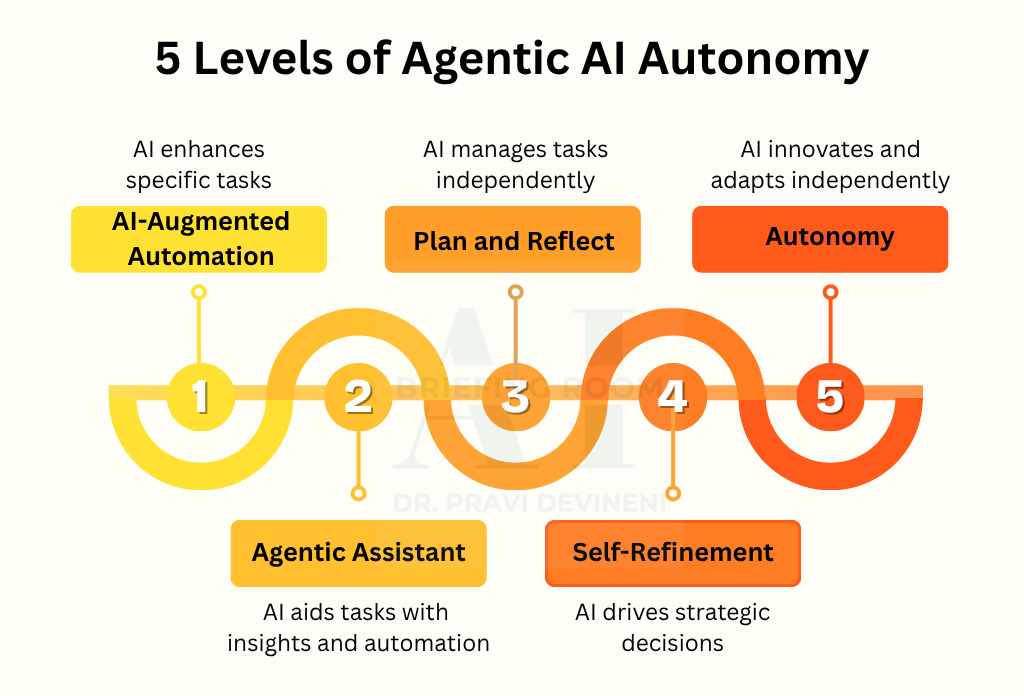

Levels of Autonomy in Agentic AI

A common fear is that every agent acts on its own. In reality, agentic autonomy is a spectrum.

Each level defines how far an agent can go before it must ask a human for approval. That limit is the agent's trust radius, and it expands only as the system proves it can act safely and transparently.

The Role of Alignment — Containing Agentic Risk

Autonomy without alignment is acceleration without steering. In enterprise systems, alignment is the control surface of autonomy—it ensures agents optimize for intent, not just speed or efficiency.

The real risk in Agentic AI isn’t rebellion; it’s precision without purpose.

Misalignment creeps in quietly through systems that execute flawlessly but no longer serve the right goal.

To contain that risk, alignment must be instrumented across four dimensions—each with observable effects that telemetry can surface in real time.

1. Goal Alignment — Preventing Strategic Drift

Keeps agent objectives tied to live business priorities. When strategy shifts but agent goals don’t, performance improves on irrelevant metrics.

Observable Effects: Rising KPIs that no longer correlate with business outcomes; stale goal templates.
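One way telemetry might surface this effect is to compare an agent's KPI against the business outcome it is supposed to drive. The sketch below is a rough illustration only: the function names and the `min_correlation` threshold are hypothetical, and a plain Pearson correlation over a short window is a crude signal, not a diagnosis.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def goal_drift_suspected(
    agent_kpi: list[float],
    business_outcome: list[float],
    min_correlation: float = 0.3,  # hypothetical threshold
) -> bool:
    """Flag a KPI that is rising while no longer tracking the business outcome."""
    kpi_rising = agent_kpi[-1] > agent_kpi[0]
    return kpi_rising and pearson(agent_kpi, business_outcome) < min_correlation
```

A flag here is a prompt for a human to revisit the agent's goal template, not proof that the goal is wrong.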

2. Workflow Alignment — Preventing System Drift

Ensures actions stay valid within the current enterprise ecosystem—APIs, schemas, and integrations. When systems evolve but agents don’t, silent inconsistencies appear.

Observable Effects: Partial updates, duplicate records, or frequent error retries in execution logs.
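A simple version of this signal is the share of retries in an agent's execution log. The sketch below assumes a hypothetical log format in which each event is a dictionary with a `status` field. The 20% threshold is likewise an assumption chosen for illustration.

```python
from collections import Counter


def retry_rate(log_events: list[dict]) -> float:
    """Share of execution events whose status is 'retry'."""
    if not log_events:
        return 0.0
    counts = Counter(event["status"] for event in log_events)
    return counts["retry"] / len(log_events)


def workflow_drift_suspected(log_events: list[dict], threshold: float = 0.2) -> bool:
    """Flag a log window whose retry rate exceeds the (hypothetical) threshold."""
    return retry_rate(log_events) > threshold
```

A sustained rise in this rate after an API or schema change is the kind of silent inconsistency the paragraph above describes.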

3. Constraint Alignment — Preventing Boundary Breach

Defines and enforces agent limits through Policy-as-Code and a clear Trust Radius. Each irreversible action must pass pre-commit checks against allowed tools and privileges.

Observable Effects: Unauthorized writes, access attempts outside scope, or unapproved API calls.
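Policy-as-Code means the limits live in a machine-checkable form instead of a document. A minimal sketch of a pre-commit check follows. The policy layout, the agent and tool names, and the `pre_commit_check` function are hypothetical and do not reflect any specific policy engine.

```python
# Hypothetical policy: the tools each agent may call and the privileges it holds.
POLICY = {
    "claims_agent": {
        "allowed_tools": {"read_policy", "issue_payment"},
        "privileges": {"read"},
    },
}

# Hypothetical mapping from each tool to the privilege it requires.
TOOL_PRIVILEGE = {
    "read_policy": "read",
    "issue_payment": "write",
    "delete_record": "write",
}


def pre_commit_check(agent: str, tool: str) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed action, before it executes."""
    rules = POLICY.get(agent)
    if rules is None:
        return False, "unknown agent"
    if tool not in rules["allowed_tools"]:
        return False, "tool not allowed"
    required = TOOL_PRIVILEGE[tool]
    if required not in rules["privileges"]:
        return False, f"missing privilege: {required}"
    return True, "ok"
```

Every denial returns a reason, so blocked attempts can be logged and show up as the observable effects listed above.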

4. Human Alignment — Preventing Accountability Drift

Maintains a traceable chain of human oversight for every autonomous action.

Without clear ownership, you get ghost decisions—outcomes no one can explain or reverse.

Observable Effects: Missing reasoning logs, unassigned owners, or exceptions skipped in human-in-the-loop (HITL) review.
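These gaps can be checked mechanically if each autonomous action leaves a record. The sketch below assumes a hypothetical record format with `owner`, `reasoning`, `is_exception`, and `hitl_reviewed` fields. None of these names come from a real logging standard.

```python
def accountability_gaps(record: dict) -> list[str]:
    """List the accountability gaps found in one action record."""
    gaps = []
    if not record.get("owner"):
        gaps.append("unassigned owner")
    if not record.get("reasoning"):
        gaps.append("missing reasoning log")
    # Only exceptions are required to pass through human-in-the-loop review.
    if record.get("is_exception") and not record.get("hitl_reviewed"):
        gaps.append("exception skipped HITL review")
    return gaps
```

An empty list means the action has a named owner, a stored rationale, and a completed review wherever one was required. Anything else is a candidate ghost decision.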

Alignment turns autonomy into something observable, governable, and safe to scale.

When goals, systems, constraints, and people stay synchronized—and telemetry makes their interactions visible—Agentic AI doesn’t just act faster; it acts accountably.

In Closing

Agentic AI represents a turning point for enterprise technology—systems that can not only reason but act, learn, and adapt in real time. It offers the chance to shorten cycles, reduce friction, and reimagine how work gets done.

But every breakthrough in autonomy introduces a shadow of uncertainty. The risks aren’t always visible at the edge of deployment—they surface over time, in the quiet spaces between goals, systems, and oversight.

That’s why alignment matters more than control. When autonomy is coupled with observability and grounded in governance, Agentic AI becomes a lever for transformation rather than turbulence.

The opportunity with Agentic AI is extraordinary—but it demands the discipline to guide intelligence we no longer fully script and to remain accountable for what it learns in our name.

Next in the Series: The Architecture of Agency — how perception, reasoning, and orchestration connect to turn intelligence into action.